Source code for http://arxiv.org/abs/1502.03492

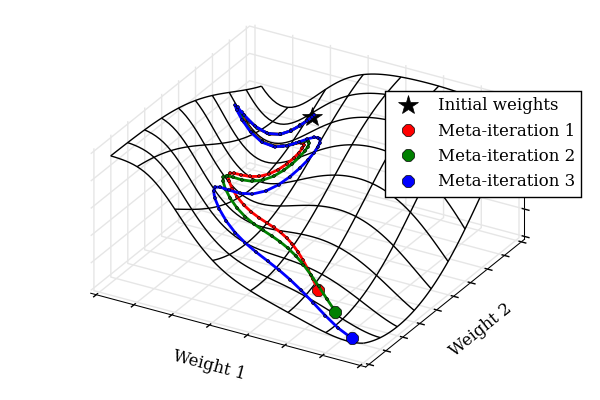

Tuning hyperparameters of learning algorithms is hard because gradients are usually unavailable. We compute exact gradients of cross-validation performance with respect to all hyperparameters by chaining derivatives backwards through the entire training procedure. These gradients allow us to optimize thousands of hyperparameters, including step-size and momentum schedules, weight initialization distributions, richly parameterized regularization schemes, and neural network architectures. We compute hyperparameter gradients by exactly reversing the dynamics of stochastic gradient descent with momentum.
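To make the idea of reversing training dynamics concrete, here is a minimal sketch in plain Python. It is not the code in this repository. It assumes a toy quadratic loss (the hypothetical `grad_loss` below) and an update of the form `v <- gamma * v - (1 - gamma) * g`, `w <- w + alpha * v`. The names `sgd_forward`, `sgd_reverse`, `alpha`, and `gamma` are chosen for illustration only. Running the update backwards recovers the initial weights and velocity without storing the intermediate iterates.

```python
def grad_loss(w):
    # Gradient of a toy loss L(w) = 0.5 * sum(c_i * w_i**2).
    # Hypothetical stand-in for a real training-loss gradient.
    curvatures = [1.0, 3.0]
    return [c * wi for c, wi in zip(curvatures, w)]


def sgd_forward(w, v, alpha, gamma, num_steps):
    # Gradient descent with momentum: velocity update, then weight update.
    for _ in range(num_steps):
        g = grad_loss(w)
        v = [gamma * vi - (1.0 - gamma) * gi for vi, gi in zip(v, g)]
        w = [wi + alpha * vi for wi, vi in zip(w, v)]
    return w, v


def sgd_reverse(w, v, alpha, gamma, num_steps):
    # Undo each step in the opposite order: weights first, then velocity.
    for _ in range(num_steps):
        w = [wi - alpha * vi for wi, vi in zip(w, v)]
        g = grad_loss(w)  # same gradient the forward step used at this point
        v = [(vi + (1.0 - gamma) * gi) / gamma for vi, gi in zip(v, g)]
    return w, v


w0, v0 = [1.0, -2.0], [0.0, 0.0]
w_final, v_final = sgd_forward(w0, v0, alpha=0.1, gamma=0.9, num_steps=20)
w_back, v_back = sgd_reverse(w_final, v_final, alpha=0.1, gamma=0.9, num_steps=20)

# Largest discrepancy between the true start and the reconstructed start.
max_error = max(abs(a - b) for a, b in zip(w0 + v0, w_back + v_back))
```

In floating point this naive reversal is only approximate, because dividing by `gamma` amplifies rounding error at every step. The sketch makes no attempt at the bookkeeping needed for an exact reversal; see the paper for how the authors handle that.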

Authors: Dougal Maclaurin, David Duvenaud, and Ryan P. Adams

Feel free to email us with any questions at [email protected] or [email protected].

For some directions that didn't pan out, see our early research log.